We’ve recently released a new product. There’s no shortage of marketing or technical information about that.

What I want to talk about today is the fun we had making it.

Tapestry was a challenge on many fronts, but I’ve found that adding a bit of humor and mischief to development helps you get past the day-to-day frustrations. It’s hard to be pissed off when you’re laughing.

The spinner

It all started with a fidget spinner. As we were getting our first beta release ready, Ged wanted a badge at the bottom of the timeline that said // BETA //. The initial release was functional, but there were a lot of rough edges that we knew needed smoothing. So a label there was.

On a Sunday afternoon I decided to have a little fun. A couple of hours later, our new badge recognized touches and had a very springy animation. And I didn’t tell anyone, not even my wife. That secrecy was hard, but the success of the gag depended on it.
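The post doesn’t say how the badge’s bounce was built. In SwiftUI the natural route would be a rotation driven by a spring animation such as `.spring(response:dampingFraction:)`. To show what “springy” means without depending on SwiftUI, here is a minimal sketch that simulates a damped spring directly. `SpinnerSpring`, its stiffness and damping values, and the one-full-turn-per-tap rule are all assumptions for illustration, not the app’s code.

```swift
/// Hypothetical model of a springy spinner: each tap moves the resting
/// angle forward one full turn, and a damped spring pulls the current
/// angle toward it, overshooting slightly before it settles.
struct SpinnerSpring {
    var angle: Double = 0         // current rotation, in degrees
    var velocity: Double = 0      // degrees per second
    var target: Double = 0        // angle the spring wants to rest at
    let stiffness: Double = 170   // assumed spring constant (unit mass)
    let damping: Double = 18      // assumed; below 2 * sqrt(stiffness), so it bounces

    /// A tap adds one full revolution to the resting angle.
    mutating func tap() {
        target += 360
    }

    /// Advance the simulation by `dt` seconds (semi-implicit Euler).
    mutating func step(dt: Double) {
        let force = stiffness * (target - angle) - damping * velocity
        velocity += force * dt
        angle += velocity * dt
    }
}

var spinner = SpinnerSpring()
spinner.tap()
var peak = 0.0
for _ in 0..<240 {                 // four seconds at 60 frames per second
    spinner.step(dt: 1.0 / 60.0)
    peak = max(peak, spinner.angle)
}
print(peak > 360, abs(spinner.angle - 360) < 1)   // prints "true true"
```

Because the damping is below the critical value (2 × √stiffness for a unit mass), the angle swings past a full turn before settling back, which is what reads as “springy.” Raising the damping toward that value removes the bounce.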

But as soon as people downloaded that first beta, we started getting comments like “I love the spinner!” And no one on the company Slack had any idea what was going on until I said, “Tap the beta badge.”

Showing your first release to other folks is always full of surprises, even when it’s self-inflicted!

The spinner also ended up being used to test our error reporting mechanism. If you tapped it too often, which many people did, you got a message telling you to ZAP the PRAM.
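The post doesn’t define “too often.” One simple way to detect it is a sliding window over recent tap times. This is a minimal sketch: `TapThrottle`, the limit of 10 taps, and the 5-second window are hypothetical, and the `print` stands in for whatever the app’s error reporting actually did.

```swift
/// Hypothetical "too many taps" detector: remembers recent tap times and
/// reports when the count inside a sliding window exceeds a limit.
struct TapThrottle {
    let limit: Int        // assumed: taps allowed per window
    let window: Double    // assumed: window length in seconds
    private var taps: [Double] = []

    init(limit: Int, window: Double) {
        self.limit = limit
        self.window = window
    }

    /// Record a tap at time `now` (in seconds). Returns true when there have
    /// been more than `limit` taps within the last `window` seconds.
    mutating func recordTap(at now: Double) -> Bool {
        taps.append(now)
        // Forget taps that have slid out of the window.
        let cutoff = now - window
        taps.removeAll { $0 < cutoff }
        return taps.count > limit
    }
}

var throttle = TapThrottle(limit: 10, window: 5)
var results: [Bool] = []
for i in 0..<12 {
    // Twelve taps a quarter second apart: the 11th and 12th trip the limit.
    results.append(throttle.recordTap(at: Double(i) * 0.25))
}
if results.last == true {
    print("Please ZAP the PRAM.")   // stand-in for the real error report
}
```

A window rather than a lifetime counter means someone who taps now and then never sees the message, while a burst of frantic spinning does.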

Yep, still having fun.

The disco

One of our beta testers, Joline Celebrion, is a huge fan of our iconography. More than once, she asked on our Patreon Discord when alternate app icons would arrive.

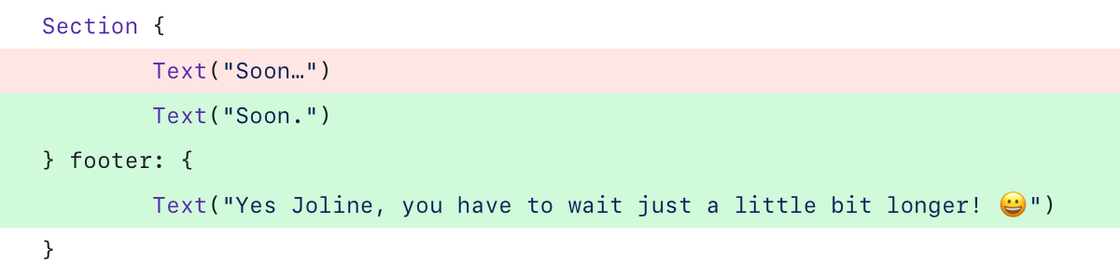

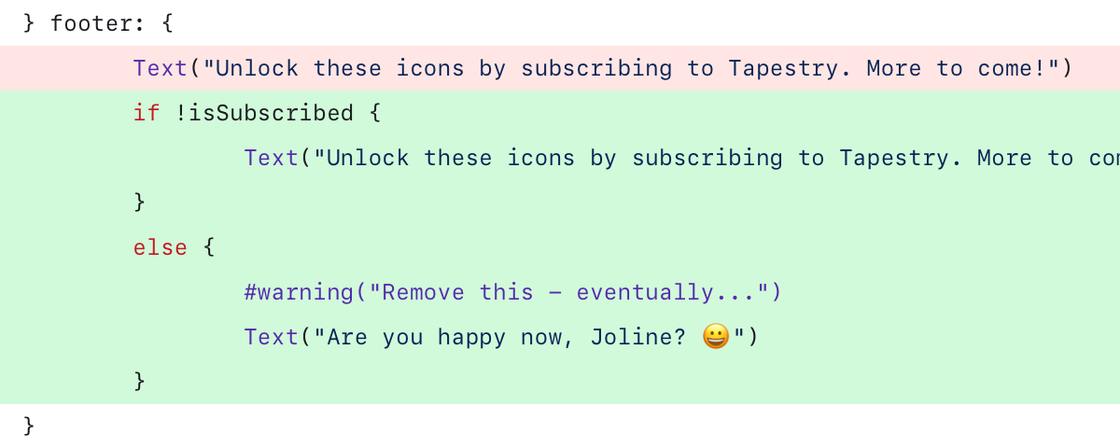

A couple of weeks before they were ready, I added this bit of code to settings under the “App Icon” category:

I knew she’d spot the new category immediately and open it excitedly, only to find a message saying they were imminent. Teasing is only fun when you follow through, so in the next week’s build there was this footer below a large selection of icons:

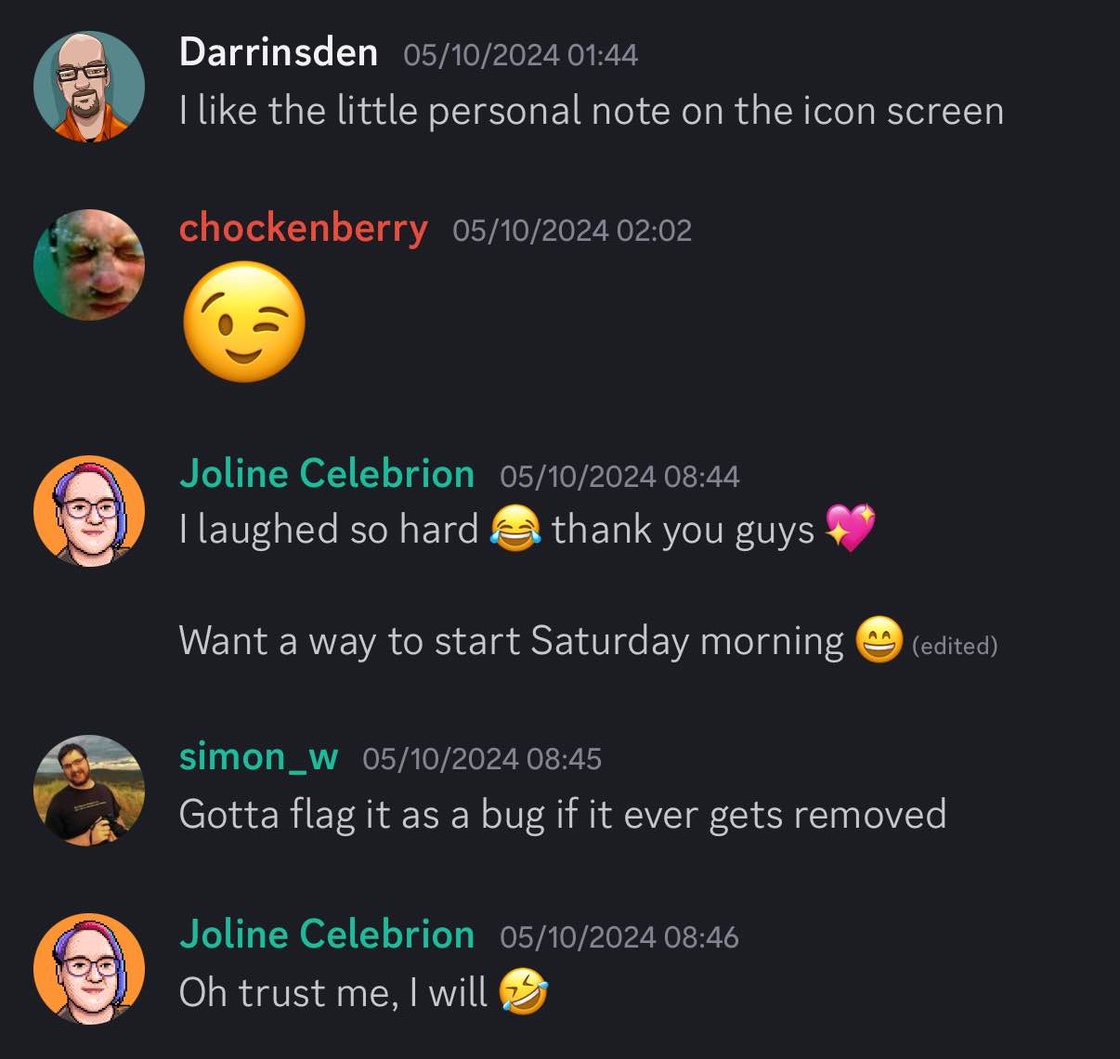

And when she launched the app:

But we had to deal with that #warning and remove the message from the released product. And I knew that removing it would immediately generate a bug report.

Good developers are proactive, especially when it comes to about boxes. And about boxes are branded with an icon. And on the factory floor, there is no shortage of icons. So I had my workaround: Joline was getting a disco.

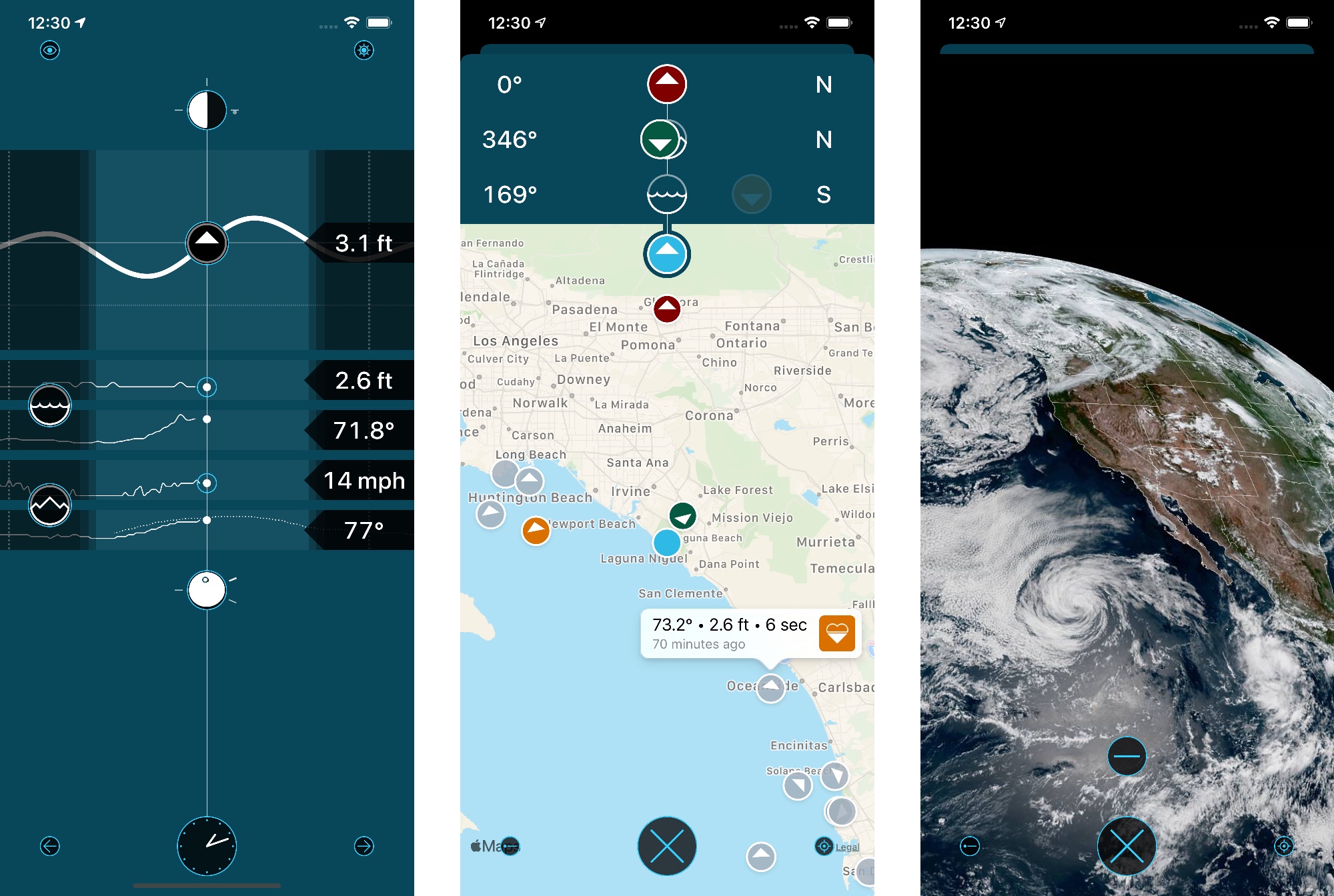

The first step was to take all the icons and cycle through them to get a nice, colorful flashing effect. That went out in a beta release, and I hinted at it on Discord. Joline and everyone else loved it.
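Cycling through icons comes down to mapping elapsed time to a frame and wrapping around the list. This is a minimal sketch, assuming a hypothetical `discoIcon` helper, placeholder icon names, and a guessed frame duration. In SwiftUI, something like a `TimelineView` could supply the elapsed time on each frame.

```swift
/// Hypothetical frame picker for an "icon disco": given the icon names and
/// the time since the about box appeared, return the icon to show now.
func discoIcon(from icons: [String], elapsed: Double, secondsPerIcon: Double = 0.15) -> String? {
    guard !icons.isEmpty, secondsPerIcon > 0, elapsed >= 0 else { return nil }
    // Whole frames elapsed so far, wrapped around the list so it loops forever.
    let frame = Int(elapsed / secondsPerIcon)
    return icons[frame % icons.count]
}

let icons = ["Classic", "Dark", "Neon", "Retro"]   // placeholder names
print(discoIcon(from: icons, elapsed: 0.5) ?? "none")   // prints "Retro"
```

Computing the frame from elapsed time, instead of advancing a counter on a timer, keeps the cycle steady even if a frame is dropped.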

But that was just an amuse-bouche. I couldn’t close the bug report unless the disco had her name in it. I’d also been meaning to learn about SwiftUI’s new TextRenderer protocol and textRenderer modifier, so I had my excuse to spend time learning and having fun.

Another important piece of the puzzle was knowing it was her tapping the icon. Luckily, Kickstarter backers register their reward in the app, so we had enough information to display everyone’s first name in the about box. I got to close a bug report, and all our Kickstarter backers got a fun little bonus: that’s a win-win!
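The post doesn’t describe how the registration data is stored. Here is a minimal sketch of the personalization step, assuming a hypothetical `BackerRegistration` record that holds the name a backer entered. The “Icon Disco” title format is also an assumption, borrowed from the post’s name for the feature.

```swift
/// Hypothetical record of what a backer enters when registering their
/// Kickstarter reward in the app (the real app's storage is not described).
struct BackerRegistration {
    let fullName: String
}

/// Returns a personalized about-box title, or nil when there is no
/// registered backer (or no usable name) so a generic title can be shown.
func discoTitle(for backer: BackerRegistration?) -> String? {
    guard let backer = backer else { return nil }
    // Keep only the first whitespace-separated word, i.e. the first name.
    guard let firstName = backer.fullName.split(whereSeparator: { $0.isWhitespace }).first else {
        return nil
    }
    return "\(firstName)'s Icon Disco"
}

let joline = BackerRegistration(fullName: "Joline Celebrion")
print(discoTitle(for: joline) ?? "Icon Disco")   // prints "Joline's Icon Disco"
print(discoTitle(for: nil) ?? "Icon Disco")      // prints "Icon Disco"
```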

But it’s still Joline’s Icon Disco. She just lets everyone else visit and pretend otherwise :-)

And if you think these are the only Easter eggs, well, let’s just say that the best part of making software fun is watching folks discover the weird things we come up with!

Like if you find yourself tapping twice on the product website’s wordmark. Repeatedly!